Communicating in a world redefined by AI

Artificial intelligence has quickly moved from futuristic promise to present-day reality, reshaping how we work, connect and communicate. That shift was at the centre of Vuelio’s recent event, Communicating in a world redefined by AI, which brought together comms leaders to explore the opportunities and risks of this new era.

The discussion highlighted the dual narratives currently shaping AI: on one side, optimism about its role as the driver of a new industrial revolution, fuelling productivity and growth; on the other, scepticism about its potential to erode trust, creativity and even democracy.

We interviewed André Labadie, Exec Chair, Business & Technology at Brands2Life, and Amy Chappell, Vuelio’s Head of Insights, after the event to unpack their perspectives further.

The narratives defining AI today

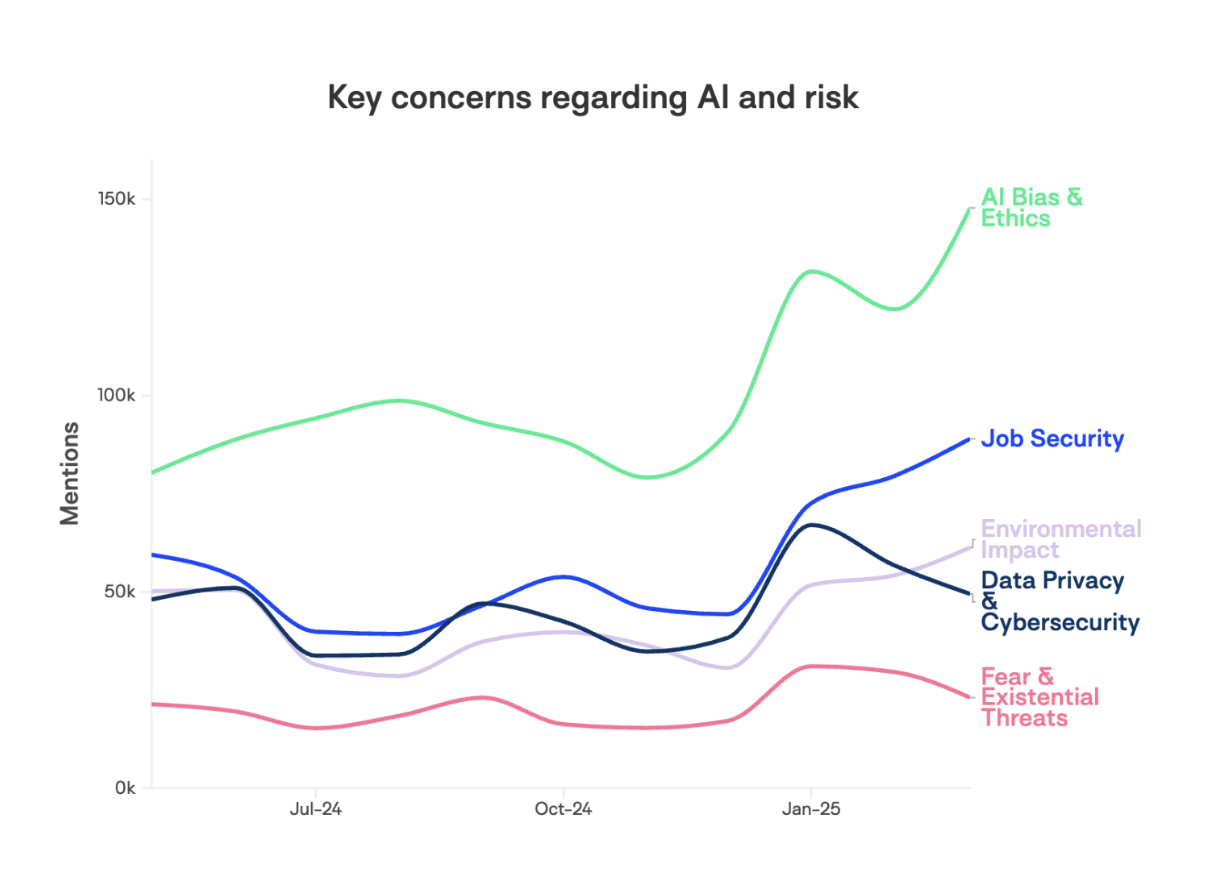

The event began by asking a simple but pressing question: how is AI being framed in our conversations right now?

According to André, two narratives dominate. On one side, there is enormous enthusiasm: AI as the driver of the next industrial revolution, promising growth, productivity and creativity at scale. “It’s the idea that AI is going to power the next industrial revolution, a major engine of productivity, creativity and economic growth. Technology firms, consultancies, governments all want to push that message, and there’s real substance behind it.”

On the other, there is caution: a recognition that these technologies also come with risks to trust, creativity and even democratic processes. “We’ve moved on from the Robocop-style stories of killer robots,” André explained, “but there are still concerns. Whether around misinformation, ethical use, or how reliant on automation we should allow ourselves to become.”

This tension between optimism and scepticism is playing out across media coverage, political debate and popular culture. Communicators now face the challenge of addressing both sides with honesty and balance.

Where media and public diverge

The event also explored where media and public opinion align on AI, and where they diverge.

At a macro level, both groups are aligned on jobs and ethics: whether roles will be displaced or reshaped by AI, and whether its applications are ethical. But beyond that, priorities differ.

“From a media lens, there’s a preoccupation with big-picture risks,” André says. “But the public, as employees or consumers, are more concerned with pragmatic and personal issues — can my child use AI safely for homework? Can I trust the reviews I’m reading online?”

This divergence matters for communicators. Messaging that plays well in the press may not resonate with employees or customers. Equally, public anxieties that appear small-scale can quickly become reputational flashpoints.

AI as solution as well as risk

Amy added an important counterpoint: AI may cause problems, but it can also be part of the solution. “It’s almost ironic, isn’t it — using AI to fix AI problems,” she reflected. “But AI can help detect misinformation, catch fake quotes, and verify sources. We’ve seen misquotes in the media around medical advice, and being able to use AI to spot and correct those quickly is vital.”

She also pointed to AI’s ability to process vast volumes of data: “From an analyst perspective, it can help us act quicker. If there’s a narrative playing out in the media, AI can help us understand what’s going on and deal with issues before they escalate.”

This dual role — both a risk factor and a potential safeguard — is central to how communicators should frame AI internally and externally.

Key challenges for communicators

For communicators trying to find their footing, André outlined three key challenges:

- Avoiding sensationalism. The hype cycle means every brand feels pressure to make statements on AI. “There are a lot of so-called thought leaders with strong opinions,” André noted. “The challenge is cutting through with something grounded and valuable.”

- Adding substance. Research shows most major companies now reference AI in their annual reports, but few explain how it has genuinely improved their operations. That gap between rhetoric and reality risks hollowing out trust.

- Maintaining credibility. Brands that present themselves as human-first but secretly rely on automation risk being caught out. Transparency, he argued, is the safest strategy.

André highlights how Puma, the sportswear brand, faced criticism for releasing an AI-generated campaign despite its identity being rooted in human performance. Outsourcing to automation felt discordant and undermined credibility. By contrast, LinkedIn rolled out AI-generated job descriptions and career advice with clear labelling and careful positioning. “They presented it as a co-pilot rather than a replacement,” André says. “Consumers knew it was AI-driven, they understood its limitations, and they were more forgiving when mistakes occurred.”

The rise of new comms channels

As AI changes not just messaging but also the information ecosystem, the question of channels is increasingly pressing.

For André, executive communications remain key for signalling authenticity. Employees, too, are crucial — often the first to amplify or critique how AI is used internally.

And interactive forums matter more than ever. “Reddit AMAs, Q&As — those have a double benefit,” he explained. “Not only do they engage audiences directly, but AI-powered search engines and large language models are increasingly over-indexing on content from those spaces.”

This led to wider discussion on phenomena like “Google Zero”, where AI-driven summaries reduce traffic to news websites. As André pointed out, this is a business model crisis for publishers — but also a challenge for communicators, who must ensure brand content surfaces in the discursive spaces where AI is now sourcing information.

Keeping humans in the loop

Both panellists stressed the importance of human oversight.

Amy highlighted the need for governance and scepticism when working with vendors: “Don’t use AI outputs as the finished article. Keep humans involved in verification, and don’t overtrust vendors who overpromise.”

She also emphasised the role of human spokespeople: “If you have a person quoted in an article or broadcast, you maintain a level of trust that an AI output alone can’t offer.”

André echoed this, pointing to the risk of “slop” — AI-generated work that looks polished but lacks depth. “The differentiator is still judgment,” he argued. “What makes communication stand out is originality, cultural awareness and human connection.”

The future of junior comms roles

The conversation turned to how AI will reshape entry-level and mid-level roles. André was clear: junior communicators must still be trained in the fundamentals. “Graduates are tomorrow’s middle managers. If we don’t train them properly now, we’ll face a crisis later,” he warned.

He also predicted new skillsets: “We’ll all become orchestrators of agents. Rather than going to a colleague for help, you’ll go to AI agents. So junior staff will need to understand how to stitch technologies together and manage processes as well as people.”

Amy agreed, emphasising the need for critical thinking: “If we don’t train graduates, they won’t know how to interrogate AI outputs. Critical thinking and strategic oversight are going to be essential skills.”

Pitfalls to avoid

Over-reliance was a recurring theme. Amy warned against being dazzled by tools that overpromise: “If it seems too good to be true, it probably is. No tool can do everything — so don’t integrate anything you can’t fully trust.”

André added that organisations often try to move too fast: “Don’t boil the ocean. Start with a subset of workflows, experiment, and build from there.”

Both panellists agreed that while AI can streamline workflows, it cannot replace human creativity, judgment and accountability.

Experiment boldly, communicate authentically

The overarching takeaway from the event is that AI is here to stay — but its impact will be defined by how organisations choose to use it.

Experimentation is important, but so are transparency and authenticity. As Amy noted, combining AI-driven monitoring with human oversight is key to managing fast-moving narratives. As André stressed, an “okay” AI-generated pitch won’t win new business — originality and distinctiveness still matter most.

Artificial intelligence may be redefining the landscape, but it is human judgment that will continue to shape the stories worth telling.
